GLM-5 Now on SiliconFlow: SOTA Open-Source Model Built for Agentic Engineering

Feb 12, 2026

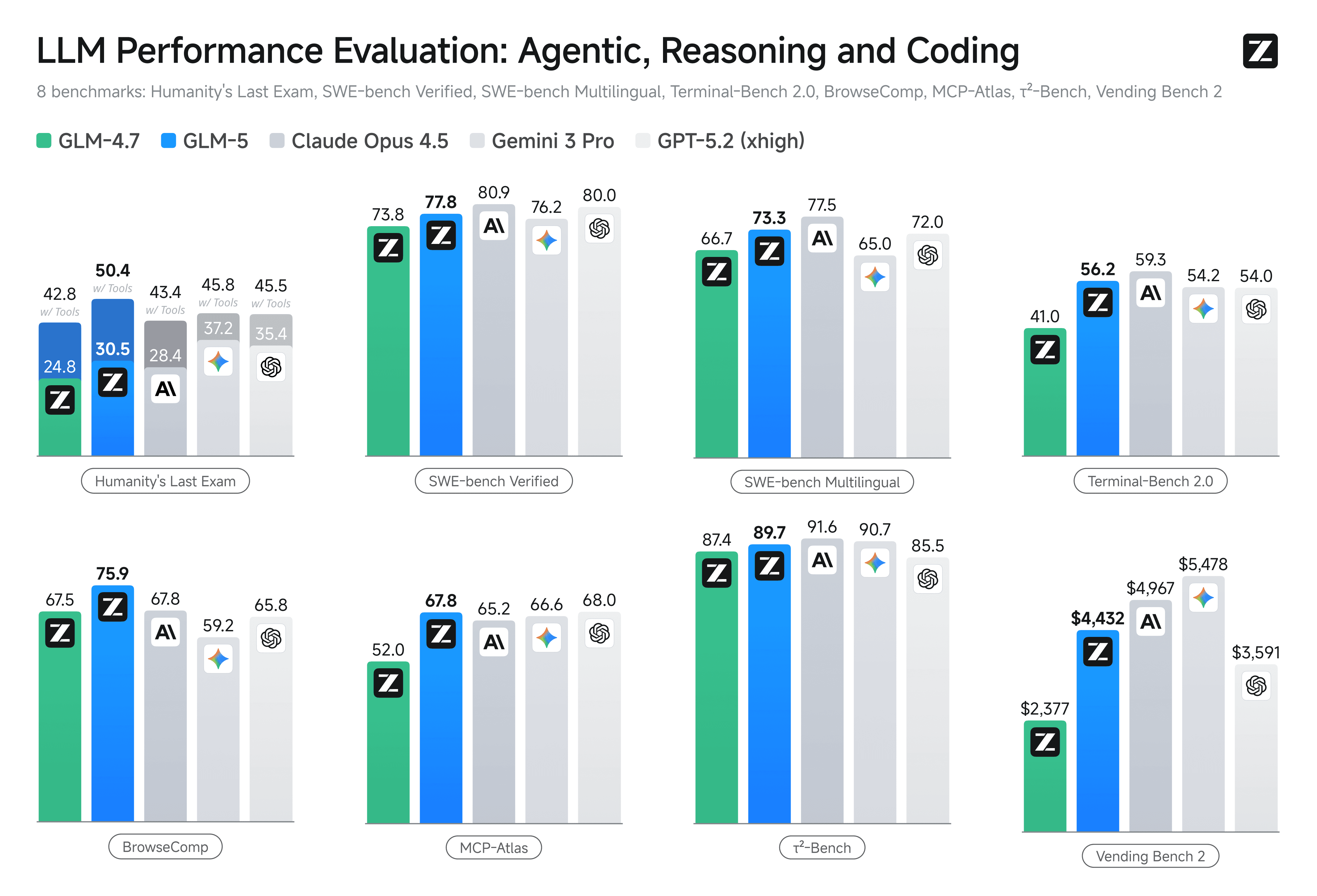

TL;DR: GLM-5 is now live on SiliconFlow. Z.ai's most advanced open-source model, it is designed specifically for complex system development and long-horizon agent tasks. Built on a 744B-parameter Mixture-of-Experts (MoE) architecture (40B active per token) and trained on 28.5T tokens, GLM-5 delivers frontier results in coding (77.8% on SWE-bench Verified), agent execution (56.2% on Terminal-Bench 2.0), and long-context reasoning, with DeepSeek Sparse Attention (DSA) integrated to keep long-context deployment affordable. Available on SiliconFlow at $1.0/M input | $3.2/M output tokens with a 205K context window, GLM-5 brings best-in-class agentic engineering to production deployments at scale.

Andrej Karpathy's recent reflection captures where we are: "Programming via LLM agents is increasingly becoming a default workflow for professionals." The playful "vibe coding" era is only the beginning. What developers really need now are AI systems that execute reliably, not just occasionally.

That's exactly what GLM-5 is built for. Z.ai engineered it specifically for agentic engineering: complex systems engineering and long-horizon agentic tasks. With 744B total parameters (40B active) trained on 28.5T tokens, it brings the consistency and reliability that production workflows require.

Now, through SiliconFlow's GLM-5 API, you can access:

Competitive Pricing: GLM-5 at $1.0/M tokens (input) | $3.2/M tokens (output)

205K Context Window: DeepSeek Sparse Attention (DSA) cuts deployment costs without sacrificing long-context capacity.

SOTA Performance: 77.8% on SWE-bench Verified, 56.2% on Terminal-Bench 2.0, and 75.9% on BrowseComp.

Seamless Integration: Deploy instantly via SiliconFlow's OpenAI-compatible API and plug GLM-5 into Claude Code, Kilo Code, Roo Code, OpenClaw, and more.

Whether you're building agents, orchestrating complex terminal workflows, or developing systems that require deep repository-level understanding, SiliconFlow's GLM-5 API delivers the agentic intelligence you need.
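To get a feel for what these rates mean for an agent workload, here is a small Python helper that turns token counts into a dollar estimate. It's a sketch: the prices are the ones listed above (check SiliconFlow's pricing page before relying on them), and the session size in the example is hypothetical.

```python
# Prices as listed in this post, in USD per million tokens.
# Assumption: these may change; confirm on SiliconFlow's pricing page.
INPUT_PRICE_PER_M = 1.0
OUTPUT_PRICE_PER_M = 3.2


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of a workload from its input and output token counts."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = (input_tokens / 1_000_000) * INPUT_PRICE_PER_M
    output_cost = (output_tokens / 1_000_000) * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical agent session: 2M prompt tokens in total across all turns
    # and 200K generated tokens.
    print(f"${estimate_cost_usd(2_000_000, 200_000):.2f}")  # prints $2.64
```

Because agents typically resend a growing context on every turn, input tokens tend to dominate the total; that's where the lower input price matters most.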

What's new in GLM-5

Compared to GLM-4.7, GLM-5 introduces major upgrades across model scale, post-training, and long-context efficiency:

Scaling up parameters and pre-training

GLM-5 grows from 355B total parameters (32B active) to 744B total parameters (40B active), while the pre-training corpus expands from 23T to 28.5T tokens. This larger-scale training investment substantially improves GLM-5's general intelligence and knowledge coverage.
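A quick back-of-the-envelope calculation puts these numbers in perspective. In a Mixture-of-Experts (MoE) model, each token is routed through only a subset of the weights, so the active-parameter count is what a single token actually uses. The snippet below only does arithmetic on the figures quoted above: total parameters roughly double (about 2.1x), active parameters grow 1.25x, and the share of weights active per token falls from about 9.0% to about 5.4%.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelScale:
    name: str
    total_params_b: float     # total parameters, in billions
    active_params_b: float    # parameters active per token, in billions
    pretrain_tokens_t: float  # pre-training tokens, in trillions

    @property
    def active_fraction(self) -> float:
        """Share of all parameters that a single token actually uses."""
        return self.active_params_b / self.total_params_b


# Figures as stated in this post.
GLM_4_7 = ModelScale("GLM-4.7", 355, 32, 23.0)
GLM_5 = ModelScale("GLM-5", 744, 40, 28.5)

if __name__ == "__main__":
    for m in (GLM_4_7, GLM_5):
        print(f"{m.name}: {m.active_fraction:.1%} of parameters active per token")
    print(f"total params: {GLM_5.total_params_b / GLM_4_7.total_params_b:.2f}x")
    print(f"active params: {GLM_5.active_params_b / GLM_4_7.active_params_b:.2f}x")
    print(f"pre-training tokens: {GLM_5.pretrain_tokens_t / GLM_4_7.pretrain_tokens_t:.2f}x")
```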

Novel Asynchronous RL: Slime

GLM-5 introduces Slime, a new asynchronous reinforcement learning (RL) infrastructure that substantially improves training throughput and efficiency, enabling more fine-grained post-training iterations.
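This post doesn't describe Slime's internals, so what follows is only a generic toy illustration of asynchronous RL, not Slime's code or API; every name in it is hypothetical. In a synchronous loop, generation pauses while the trainer updates weights. In an asynchronous one, rollout workers keep generating with whatever weights are current while the trainer consumes finished rollouts. The asyncio sketch below simulates both sides with `asyncio.sleep(0)` stand-ins and records each consumed rollout's "policy lag" (how many updates behind it was generated), which is the trade-off asynchronous designs accept in exchange for throughput.

```python
import asyncio
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Trajectory:
    worker_id: int
    policy_version: int  # version of the weights that generated this rollout
    reward: float


async def rollout_worker(worker_id: int, queue: asyncio.Queue, state: dict, n_rollouts: int) -> None:
    """Keep generating rollouts with whatever weights are current; never wait for training."""
    for i in range(n_rollouts):
        await asyncio.sleep(0)  # stand-in for slow LLM generation
        version = state["version"]
        # Toy reward; a real system would score the trajectory here.
        await queue.put(Trajectory(worker_id, version, reward=float(i % 2)))


async def trainer(queue: asyncio.Queue, state: dict, total: int, batch_size: int,
                  train_yields: int) -> Tuple[List[Trajectory], List[int]]:
    """Consume rollouts in batches, record how stale each one is, then 'update' weights."""
    consumed: List[Trajectory] = []
    lags: List[int] = []
    while len(consumed) < total:
        n = min(batch_size, total - len(consumed))
        batch = [await queue.get() for _ in range(n)]
        lags.extend(state["version"] - t.policy_version for t in batch)
        consumed.extend(batch)
        for _ in range(train_yields):
            await asyncio.sleep(0)  # stand-in for a gradient step; workers keep running
        state["version"] += 1  # publish new weights
    return consumed, lags


async def run(num_workers: int = 4, rollouts_per_worker: int = 8,
              batch_size: int = 4, train_yields: int = 3):
    queue: asyncio.Queue = asyncio.Queue(maxsize=16)  # bounds how far generation runs ahead
    state = {"version": 0}
    total = num_workers * rollouts_per_worker
    workers = [
        asyncio.create_task(rollout_worker(w, queue, state, rollouts_per_worker))
        for w in range(num_workers)
    ]
    consumed, lags = await trainer(queue, state, total, batch_size, train_yields)
    await asyncio.gather(*workers)
    return consumed, lags, state["version"]


if __name__ == "__main__":
    consumed, lags, final_version = asyncio.run(run())
    print(f"{len(consumed)} rollouts, {final_version} updates, max policy lag {max(lags)}")
```

The bounded queue is the design knob here: a larger `maxsize` lets generation run further ahead of training (more throughput, more staleness), while a smaller one keeps the data closer to on-policy.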

Together, these pre- and post-training advances give GLM-5 significant gains over GLM-4.7 across a wide range of academic benchmarks, along with best-in-class performance among open-source models on reasoning, coding, and agentic tasks, closing the gap with frontier models.

DSA Integration

GLM-5 is the first GLM model to integrate DeepSeek Sparse Attention (DSA), which significantly reduces deployment cost while preserving long-context capacity.
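Conceptually, DSA as described in DeepSeek's V3.2 technical report adds a lightweight "lightning indexer" that scores every earlier token for each query, keeps only the top-k, and runs regular attention over that subset, so the expensive attention step touches only k tokens per query instead of the whole context. The pure-Python sketch below illustrates that idea for a single query. It is a simplified toy (one query, tiny vectors, no batching or causal masking), not GLM-5's or DeepSeek's implementation, and the indexer score is our paraphrase of the published description.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def index_scores(indexer_heads: Sequence[Tuple[float, Vector]],
                 index_keys: Sequence[Vector]) -> List[float]:
    """Cheap relevance score per past token: sum over heads of w_j * ReLU(q_j . k_s)."""
    return [
        sum(w * max(0.0, dot(q_j, k_s)) for w, q_j in indexer_heads)
        for k_s in index_keys
    ]


def topk_sparse_attention(q: Vector, keys: Sequence[Vector], values: Sequence[Vector],
                          indexer_heads: Sequence[Tuple[float, Vector]],
                          index_keys: Sequence[Vector], k: int):
    """Attend from one query to only the k past tokens the indexer ranks highest."""
    scores = index_scores(indexer_heads, index_keys)
    # Stable sort: ties keep their original (earlier-position-first) order.
    selected = sorted(range(len(keys)), key=lambda s: scores[s], reverse=True)[:k]
    scale = 1.0 / math.sqrt(len(q))
    weights = softmax([dot(q, keys[s]) * scale for s in selected])
    out = [0.0] * len(values[0])
    for w, s in zip(weights, selected):
        for j, v in enumerate(values[s]):
            out[j] += w * v
    return out, selected


if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
    values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.0, 0.0]]
    heads = [(1.0, [1.0, 0.0])]  # a single indexer head (weight, query)
    out, selected = topk_sparse_attention([1.0, 0.0], keys, values, heads, keys, k=2)
    print(selected, out)  # prints [0, 2] [0.75, 0.25]
```

With `k` equal to the number of past tokens, this reduces to ordinary dense attention; shrinking `k` is what trades a small amount of recall for a large cut in attention cost on long contexts.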

SOTA Benchmark Performance

With these advances in pre- and post-training, GLM-5 achieves best-in-class performance among open-source models globally, narrowing the gap with frontier models such as Claude Opus 4.5, Gemini 3 Pro, and GPT-5.2 (xhigh):

SOTA Agentic Performance: GLM-5 sets a new bar for open-source agents. On BrowseComp (web-scale research and synthesis) it ranks #1 overall, ahead even of leading proprietary models, and it sets new standards for tool-calling precision (MCP-Atlas) and multi-step scenario planning (τ²-Bench).

Frontier-Level Engineering: The model's coding proficiency now aligns with Claude Opus 4.5 and surpasses Gemini 3 Pro. By achieving SOTA open-source scores on SWE-bench Verified (77.8%) and Terminal-Bench 2.0 (56.2%), GLM-5 demonstrates the reliability required for autonomous repository-level debugging and complex system engineering.

How it performs in the real world

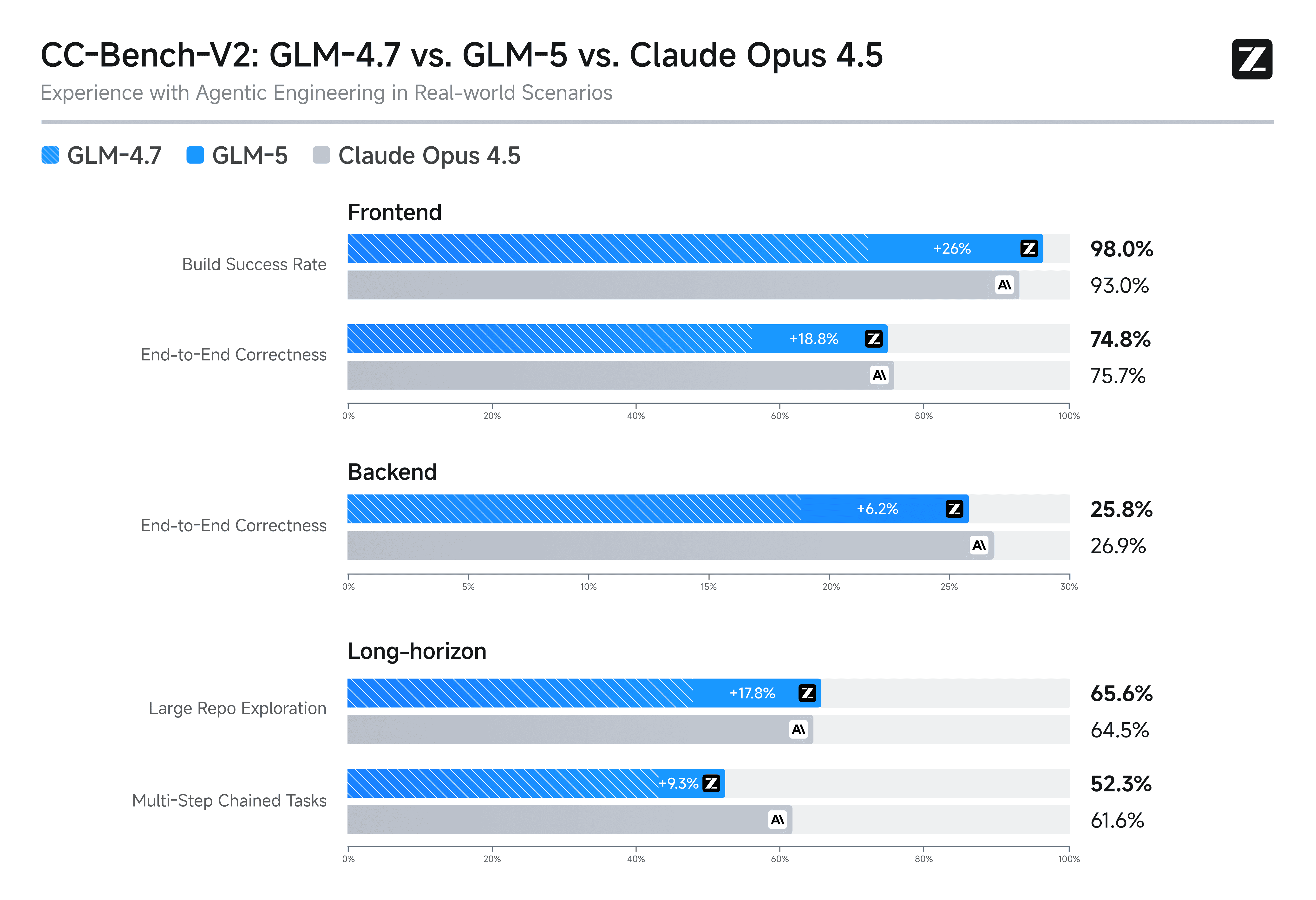

On CC-Bench-V2, Z.ai's internal evaluation suite, GLM-5 significantly outperforms GLM-4.7 on frontend (98% build success rate), backend, and long-horizon tasks, narrowing the gap to Claude Opus 4.5.

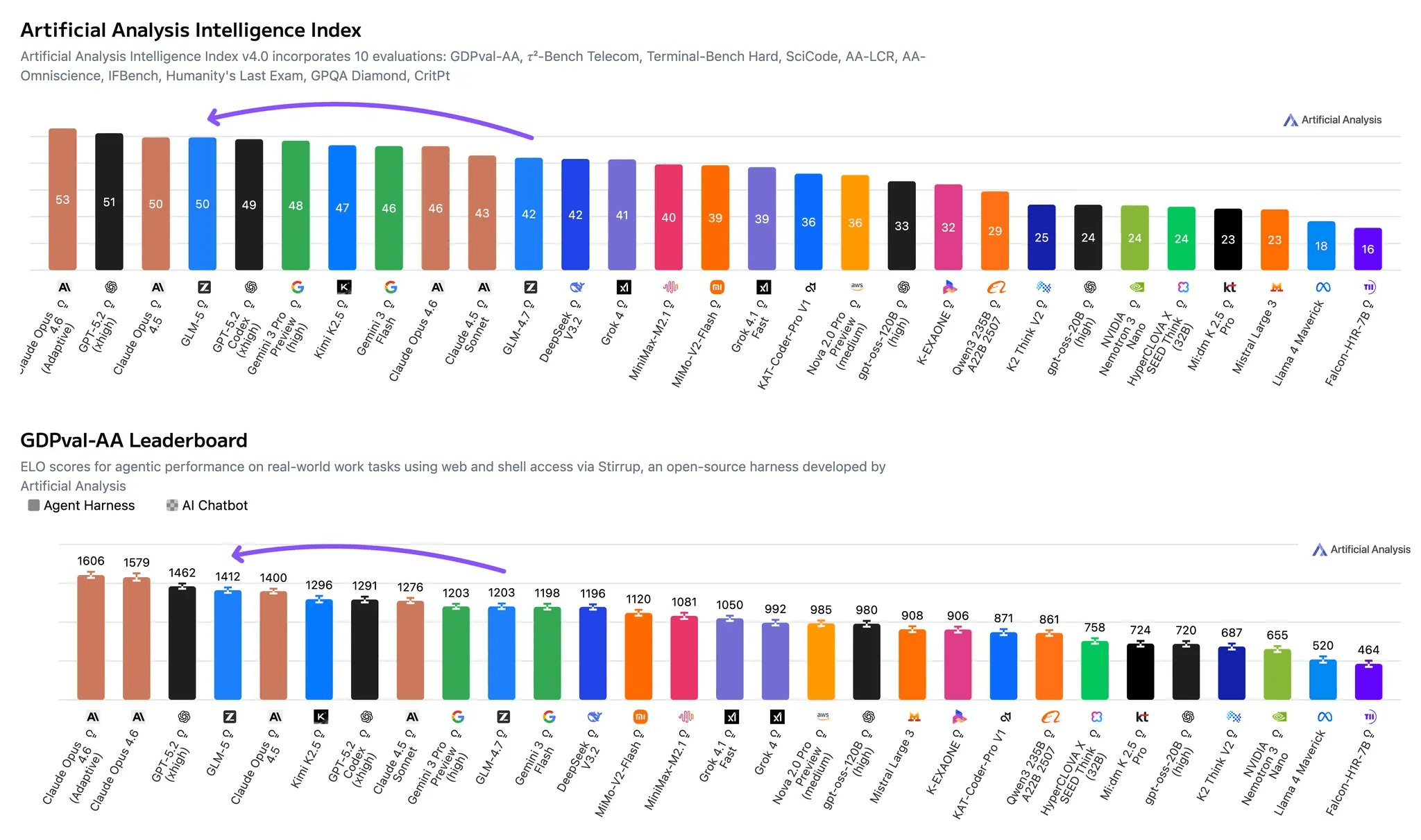

This leap in performance is further validated by the latest industry benchmarks from Artificial Analysis:

Intelligence Index: GLM-5 scored 50 on the Artificial Analysis Intelligence Index v4.0, making it the first open-source model to reach this score.

Agentic Index: It achieved an Agentic Index score of 63, the highest of any open-source model and third overall.

Hallucination Control: The model also shows significant improvement on the AA-Omniscience Index compared to GLM-4.7, delivering the lowest hallucination rate of any model currently tested by Artificial Analysis.

Get Started Immediately

Explore: Try GLM-5 in the SiliconFlow playground.

Integrate: Use our OpenAI-compatible API. Explore the full API specifications in the SiliconFlow API documentation.
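Here is a minimal request sketch using only Python's standard library. It assumes the endpoint `https://api.siliconflow.com/v1/chat/completions`, the model ID `zai-org/GLM-5`, and an API key stored in a `SILICONFLOW_API_KEY` environment variable; all three are assumptions, so confirm the base URL and exact model name in the SiliconFlow API documentation. The request body follows the standard OpenAI chat-completions shape.

```python
import json
import os
import urllib.request

# Assumptions: verify both values in the SiliconFlow API documentation.
BASE_URL = "https://api.siliconflow.com/v1"  # assumed endpoint
MODEL_ID = "zai-org/GLM-5"                   # assumed model identifier


def build_chat_request(api_key: str, prompt: str, max_tokens: int = 1024,
                       base_url: str = BASE_URL, model: str = MODEL_ID) -> urllib.request.Request:
    """Build an OpenAI-style chat-completions POST request (not sent yet)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request, timeout=120) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("SILICONFLOW_API_KEY")  # hypothetical variable name
    if key:
        req = build_chat_request(key, "Write a Python function that reverses a linked list.")
        print(send(req))
```

Because the endpoint is OpenAI-compatible, pointing the official `openai` Python SDK at the same base URL should work too; the standard-library version above just keeps the example dependency-free.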