GLM-4.7 Now on SiliconFlow: Advanced Coding, Reasoning & Tool Use Capabilities

Dec 23, 2025

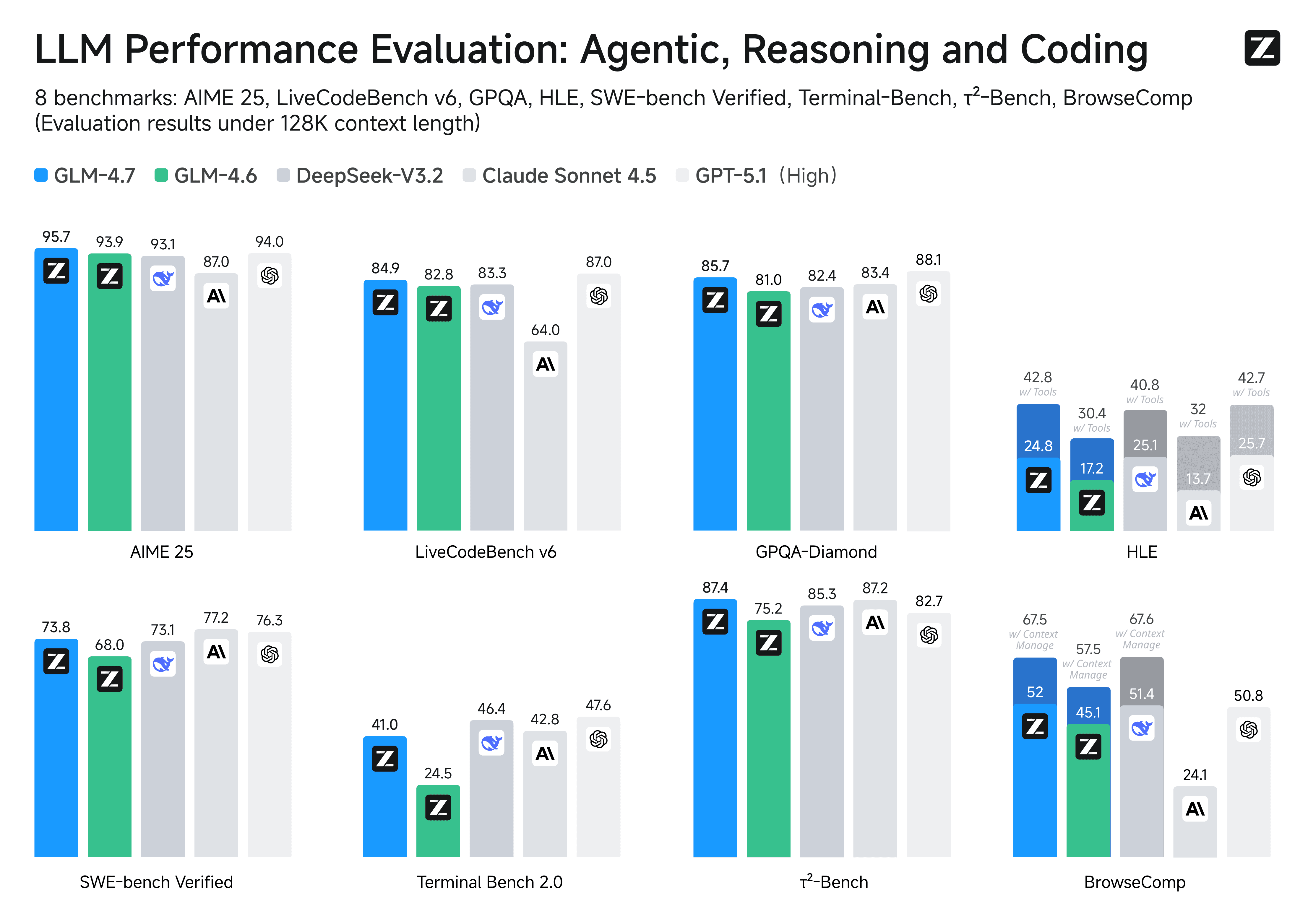

We're excited to announce that GLM-4.7, Z.ai's latest flagship model, is now available on SiliconFlow with Day 0 support. Compared with its predecessor GLM-4.6, this release brings significant advancements across coding, complex reasoning, and tool utilization — delivering performance that rivals or even outperforms industry leaders like Claude Sonnet 4.5 and GPT-5.1.

Currently, SiliconFlow supports the entire GLM model series, including GLM-4.5, GLM-4.5-Air, GLM-4.5V, GLM-4.6, GLM-4.6V, and now GLM-4.7.

SiliconFlow's Day 0 support includes:

Competitive Pricing: GLM-4.7 costs $0.6/M tokens (input) and $2.2/M tokens (output).

205K Context Window: Tackle complex coding tasks, deep document analysis, and extended agentic workflows.

Anthropic & OpenAI-Compatible APIs: Deploy via SiliconFlow and integrate seamlessly with Claude Code, Kilo Code, Cline, Roo Code, and other mainstream agent workflows, where GLM-4.7 shows significant improvements on complex tasks.
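
To get a feel for what the listed prices mean in practice, here is a minimal cost-estimation sketch. The per-million-token rates come from the pricing above; the function name and the example workload are purely illustrative, and actual billing may differ from this simple arithmetic.

```python
# Prices quoted in this post, in USD per one million tokens.
INPUT_PRICE_PER_M = 0.6
OUTPUT_PRICE_PER_M = 2.2


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request at the listed GLM-4.7 prices."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


# Hypothetical workload: a 150,000-token prompt and an 8,000-token reply.
cost = estimate_cost_usd(150_000, 8_000)
print(f"${cost:.4f}")  # prints $0.1076
```

In this example the long prompt accounts for $0.09 of the total and the reply for roughly $0.018, so input tokens dominate the cost of large-context requests.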

What Makes GLM-4.7 Special

GLM-4.7, your new coding partner, comes with the following features:

Core Coding Excellence

GLM-4.7 sets a new standard for multilingual agentic coding and terminal-based tasks. Compared to its predecessor, the improvements are substantial:

73.8% (+5.8%) on SWE-bench Verified

66.7% (+12.9%) on SWE-bench Multilingual

41% (+16.5%) on Terminal Bench 2.0

The model now supports "thinking before acting," enabling more reliable performance on complex tasks across mainstream agent frameworks including Claude Code, Kilo Code, Cline, and Roo Code.

Vibe Coding

GLM-4.7 takes a major leap forward in UI quality. It produces cleaner, more modern webpages and generates better-looking slides with more accurate layout and sizing. Whether you're prototyping interfaces or creating presentations, the visual output quality is noticeably enhanced.

Advanced Tool Use

Tool utilization has been significantly enhanced. On multi-step benchmarks like τ²-Bench and web browsing tasks via BrowseComp, GLM-4.7 surpasses both Claude Sonnet 4.5 and GPT-5.1 High, demonstrating superior capability for complex, real-world workflows.

Complex Reasoning Capabilities

Mathematical and reasoning abilities see a substantial boost: GLM-4.7 achieves 42.8% on the HLE (Humanity's Last Exam) benchmark, a +12.4% gain over GLM-4.6. Chat, creative writing, and role-play also improve significantly.

Whether it's coding, creativity, or complex reasoning — get started now to see what GLM-4.7 brings to your workflow.

Get Started Immediately

Explore: Try GLM-4.7 in the SiliconFlow playground.

Integrate: Use our OpenAI/Anthropic-compatible API. Explore the full API specifications in the SiliconFlow API documentation.
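
As a rough starting point for integration, the sketch below builds an OpenAI-style chat completion request using only the Python standard library. The base URL, the model identifier, and the `SILICONFLOW_API_KEY` environment variable mentioned in the usage note are assumptions rather than details stated in this post, and the response parsing assumes the usual OpenAI chat completion shape. Check all of them against the SiliconFlow API documentation before relying on them.

```python
import json
import urllib.request

# Assumptions (not stated in this post): confirm both values in the API docs.
BASE_URL = "https://api.siliconflow.com/v1"  # assumed OpenAI-compatible endpoint
MODEL_ID = "zai-org/GLM-4.7"                 # assumed model identifier


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the first reply's text.

    Assumes the response follows the OpenAI chat completion format.
    """
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

To try it, read your key from an environment variable (for example `os.environ["SILICONFLOW_API_KEY"]`, a hypothetical name), pass it to `build_chat_request` along with a prompt, and hand the result to `send_chat_request`. Keeping request construction separate from sending makes the payload easy to inspect before any tokens are billed.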