
TL;DR: Kimi K2.5 is now available on SiliconFlow. As the most powerful open-source model to date, it pairs state-of-the-art coding and vision capabilities with a self-directed Agent Swarm architecture that coordinates up to 100 sub-agents and 1,500 tool calls, for up to a 4.5× speedup over single-agent execution. Powered by these capabilities, K2.5 delivers strong performance across coding with vision, agentic workflows, and real-world office productivity scenarios. Start building today with SiliconFlow's API to bring SoTA visual agentic intelligence into production.

We are excited to bring Kimi K2.5 to SiliconFlow, unlocking a new era of visual agentic intelligence for developers worldwide. Built on Kimi K2 through continued pretraining on approximately 15T mixed visual and text tokens, K2.5 is a native multimodal model with state-of-the-art coding and vision capabilities. It also introduces a self-directed Agent Swarm paradigm that can coordinate up to 100 specialized sub-agents executing parallel workflows of up to 1,500 coordinated steps, without predefined roles or hand-crafted workflows.

Now, through SiliconFlow's Kimi K2.5 API, you can expect:

Competitive Pricing: $0.55 per million input tokens and $3.00 per million output tokens.

262K Context Window: Handle long documents, complex conversations, and extended multi-agent workflows with rich visual inputs.

Seamless Integration: Call K2.5 instantly through SiliconFlow's OpenAI-compatible API, or plug it into Claude Code, Kilo Code, Roo Code, OpenClaw, and more.
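Because pricing is quoted per million tokens, estimating the bill for a workload is simple arithmetic. The helper below is a minimal sketch using the two published rates; it assumes billing is purely linear in token counts and ignores any caching discounts, minimum charges, or rounding the platform may apply. The function name is illustrative.

```python
# SiliconFlow rates for Kimi K2.5 quoted above, in US dollars per million tokens.
INPUT_USD_PER_M = 0.55
OUTPUT_USD_PER_M = 3.00


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate request cost from token counts.

    Simplified sketch: assumes linear per-token billing with no caching
    discounts, minimum charges, or platform-specific rounding.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total = input_tokens * INPUT_USD_PER_M + output_tokens * OUTPUT_USD_PER_M
    return total / 1_000_000


# Example: a 200K-token prompt (within the 262K window) with a 20K-token answer.
print(f"${estimate_cost_usd(200_000, 20_000):.2f}")  # $0.17
```

Output tokens cost roughly 5.5× more than input tokens, so long generations (for example, Thinking Mode traces) tend to dominate the bill more than long prompts do.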

Whether you're building interactive front-ends from visual prompts, conducting complex research with parallel agent execution, or automating office workflows to generate expert-level documents and presentations, SiliconFlow's Kimi K2.5 API delivers the performance you need.

In the following sections, we'll break down K2.5's key features, show how it performs on real-world tasks via SiliconFlow's API, and provide configuration tips to maximize performance for your workflows.

What's New in K2.5

K2.5 can reconstruct a website from a single screenshot, refine the code through natural conversation, and turn design mockups into production-ready React components with animations. When tasked with market analysis, it can orchestrate 100 specialized researchers executing 1,500 coordinated steps in parallel.

K2.5 delivers three fundamental features:

Native Multimodality

K2.5 excels in visual knowledge, cross-modal reasoning, and agentic tool use grounded in visual inputs. These capabilities stem from a fundamental insight in its training: at scale, vision and text don't compete; they reinforce each other. Through continued pretraining on approximately 15T mixed vision–language tokens, K2.5 learns to see and code as one unified skill.

Coding with Vision

K2.5 doesn't just "see" images; it reasons across visual inputs and translates them directly into production-ready code. As the strongest open-source model to date, K2.5 particularly excels in front-end development. Show it a UI screenshot to debug layout issues, or upload a design mockup to generate interactive React components with animations and responsive layouts. This changes how developers express intent and lowers the barrier to doing so: instead of writing detailed specifications, you can show K2.5 what you want.

Prompt: Hey, I really love the whole vibe of Zara's website (screenshot attached)—you know, that clean, minimalist look with great typography and smooth animations. Meanwhile, I want to build a portfolio site for my design works. Could you help me create something in a similar style? Also, really important, I want to make sure the layout is solid with no overlapping elements or anything broken. Everything should be fully functional and look clean when I open it.
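To send a prompt like this together with its screenshot through an OpenAI-compatible chat API, the image and the text travel as separate parts of a single user message. The sketch below assumes SiliconFlow accepts the standard OpenAI `image_url` content-part format for K2.5 and inlines the image as a base64 data URL; the helper name and the file name in the usage comment are hypothetical.

```python
import base64


def build_vision_message(image_bytes: bytes, mime_type: str, prompt: str) -> dict:
    """Build one OpenAI-style user message pairing an image with text.

    Assumes the endpoint accepts OpenAI "image_url" content parts; the
    image is embedded as a base64 data URL so no public hosting is needed.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
            {"type": "text", "text": prompt},
        ],
    }


# Usage (hypothetical file name):
# with open("zara_screenshot.png", "rb") as f:
#     message = build_vision_message(f.read(), "image/png",
#                                    "Build a portfolio site in a similar style.")
```

The resulting dictionary goes into the `messages` list of a chat-completions request like any text-only message, so multi-turn refinement ("make the header sticky") is just a matter of appending further messages to the same list.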

Agent Swarm

K2.5 moves from single-agent scaling to a self-coordinating Agent Swarm that decomposes complex tasks into parallel sub-tasks, each executed by a specialized agent.

Here's how it works: ask K2.5 to identify the top three YouTubers in each of 100 niche professional fields. The orchestrator first researches and defines these diverse domains, from computational linguistics to quantum chemistry. It then spawns 100 specialized sub-agents, each tasked with researching a single field. These agents work in parallel, independently gathering data and analyzing content creators. The result is 300 comprehensive YouTuber profiles and a consolidated report, delivered in a fraction of the time a single agent would take.
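The fan-out/fan-in shape of this workflow can be illustrated with plain `asyncio`. This is a toy sketch, not how K2.5 implements Agent Swarm internally: in K2.5 the model itself decides how to decompose the task and how many sub-agents to spawn, whereas here `research_field` is a hypothetical stand-in for a sub-agent and the field names are placeholders.

```python
import asyncio


async def research_field(field: str) -> list[str]:
    """Hypothetical sub-agent. A real one would call the model and tools;
    this one simulates latency and returns three placeholder profiles."""
    await asyncio.sleep(0.01)  # stand-in for model and tool-call latency
    return [f"{field}: creator #{rank}" for rank in range(1, 4)]


async def orchestrate(fields: list[str]) -> dict[str, list[str]]:
    """Fan out one sub-agent per field, run them concurrently, then fan in."""
    results = await asyncio.gather(*(research_field(f) for f in fields))
    # gather returns results in input order, so zip pairs each field correctly.
    return dict(zip(fields, results))


fields = [f"field-{i}" for i in range(100)]  # placeholder domain names
report = asyncio.run(orchestrate(fields))
print(len(report), sum(len(p) for p in report.values()))  # 100 300
```

Because the 100 simulated sub-agents wait concurrently, total wall-clock time is close to that of one sub-agent rather than 100 of them, which is the intuition behind the swarm's runtime savings; real speedups are smaller because of orchestration overhead and dependencies between steps.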

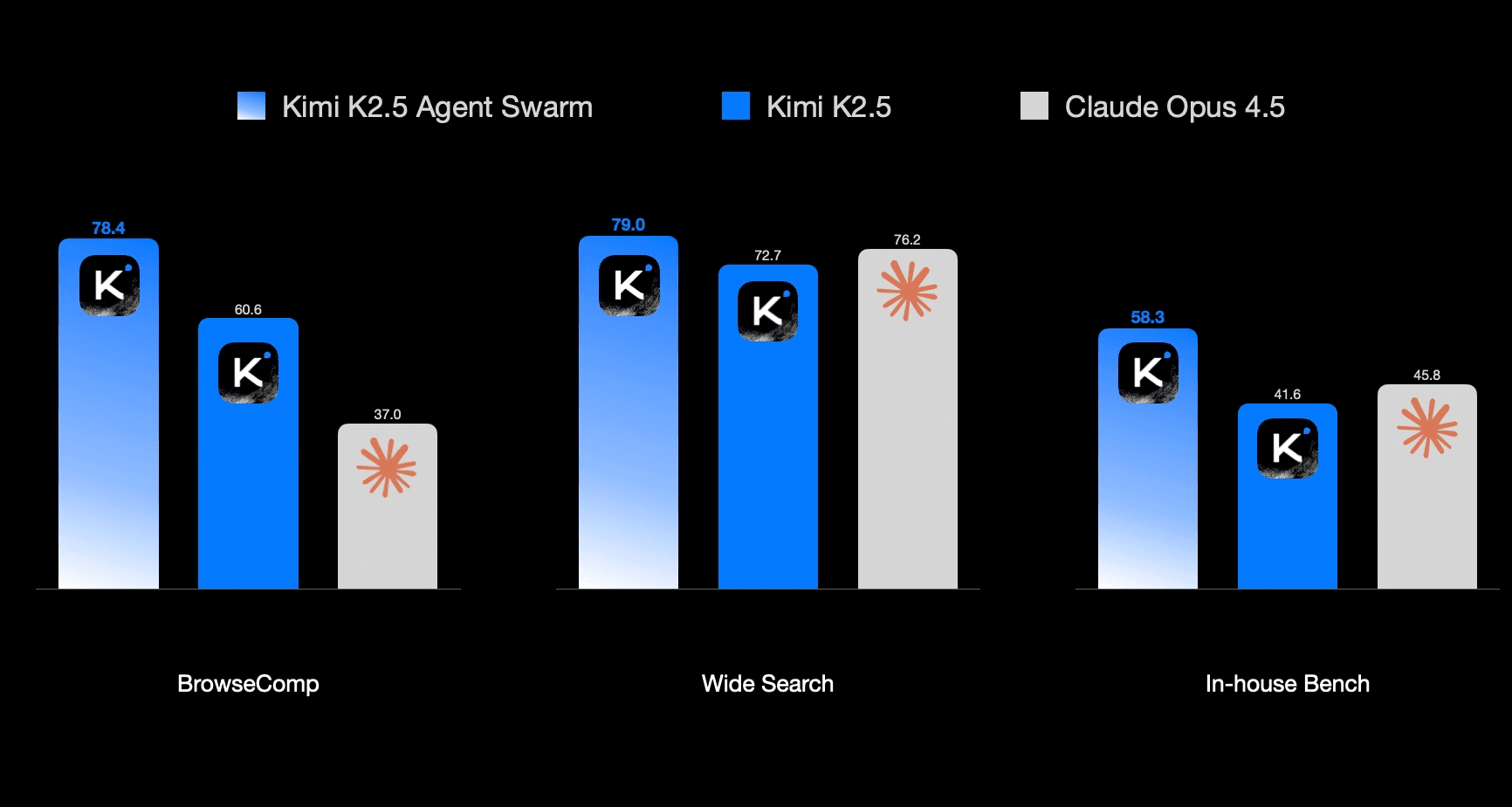

In Moonshot AI's internal evaluations, K2.5 Agent Swarm leads to an 80% reduction in end-to-end runtime while enabling more complex, long-horizon workloads, as shown below.

Benchmark Performance

Kimi K2.5 leads all compared models on the agentic benchmarks below and achieves frontier-level performance across the other major categories, placing it in the same tier as GPT-5.2, Claude Opus 4.5, and Gemini 3 Pro. This breadth makes it ready for production deployment across diverse workflows:

| Category | Benchmark | Kimi K2.5 | GPT-5.2 (xhigh) | Claude Opus 4.5 (extended thinking) | Gemini 3 Pro |
|---|---|---|---|---|---|
| Agents | HLE-Full | 🥇50.2 | 45.5 | 43.2 | 45.8 |
| | BrowseComp | 🥇74.9 | 65.8 | 57.8 | 59.2 |
| | DeepSearchQA | 🥇77.1 | 71.3 | 76.1 | 63.2 |
| Coding | SWE-Bench Verified | 76.8 | 80.0 | 80.9 | 76.2 |
| | SWE-Bench Multilingual | 73.0 | 72.0 | 77.5 | 65.0 |
| Image | MMMU Pro | 78.5 | 79.5 | 74.0 | 81.0 |
| | MathVision | 84.2 | 83.0 | 77.1 | 86.1 |
| | OmniDocBench 1.5 | 🥇88.8 | 85.7 | 87.7 | 88.5 |
| Video | VideoMMMU | 86.6 | 85.9 | 84.4 | 87.6 |
| | LongVideoBench | 🥇79.8 | 76.5 | 67.2 | 77.7 |

Since its release, Kimi K2.5 has also made waves in other evaluation arenas:

OSWorld (Agent Execution): Ranks #1 with a 63.3% success rate on real computer-environment tasks, outperforming Claude Sonnet 4.5 (62.9%) and Seed-1.8 (61.9%).

DesignArena (UI Generation): Scores 1349, the highest among all models, surpassing Gemini 3 Pro, Claude Opus 4.5, and GLM-4.7. K2.5 particularly excels in 3D design, website creation, and SVG generation tasks.

Vision Arena (Multimodal): Ranks #1 among open-source models and #6 overall with a score of 1249, ahead of GPT-5.1 (1238) in understanding and processing visual inputs.

Start Using K2.5 in Your Favorite Tools

Kimi K2.5 is now available on SiliconFlow. Integrate it into your development workflow through:

Kilo Code, Claude Code, Cline, Roo Code, OpenClaw, SillyTavern, Kimi Code, Trae, and more.

K2.5 Configuration Tips

To get the best performance from Kimi K2.5 on SiliconFlow, follow these recommended settings:

| Parameter | Recommended | Notes |
|---|---|---|
| Instant Mode: fast responses, everyday tasks | | |
| enable_thinking | false | Disables the reasoning process for faster responses |
| temperature | 0.6 | Optimized for consistent, focused outputs |
| top_p | 0.95 | Standard sampling parameter |
| Thinking Mode: deep reasoning, complex problems | | |
| enable_thinking | true | Enables step-by-step reasoning |
| temperature | 1.0 | Higher creativity for complex reasoning |
| top_p | 0.95 | Standard sampling parameter |

Note: Video input is currently experimental and is only supported by Moonshot AI's official API.
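The two modes in the configuration table differ only in three request fields, so it is convenient to keep them in one place and merge them into each request body. The sketch below assumes `enable_thinking` is sent as a top-level field of the chat-completions body, and that the model ID is `moonshotai/Kimi-K2.5`; both are assumptions to confirm against SiliconFlow's model page and API documentation. `build_request` is a hypothetical helper name.

```python
# Recommended settings from the configuration table.
MODE_SETTINGS = {
    "instant": {"enable_thinking": False, "temperature": 0.6, "top_p": 0.95},
    "thinking": {"enable_thinking": True, "temperature": 1.0, "top_p": 0.95},
}

# Assumed model identifier; check the SiliconFlow model page for the exact ID.
MODEL_ID = "moonshotai/Kimi-K2.5"


def build_request(messages: list[dict], mode: str = "instant") -> dict:
    """Return a chat-completions request body with the recommended settings.

    Assumes enable_thinking is accepted as a top-level body field.
    """
    if mode not in MODE_SETTINGS:
        raise ValueError(f"unknown mode {mode!r}; expected one of {sorted(MODE_SETTINGS)}")
    # Merging into a new dict leaves MODE_SETTINGS untouched between calls.
    return {"model": MODEL_ID, "messages": messages, **MODE_SETTINGS[mode]}


body = build_request([{"role": "user", "content": "Refactor this function."}], mode="thinking")
print(body["enable_thinking"], body["temperature"])  # True 1.0
```

If you use the official `openai` Python SDK pointed at SiliconFlow's base URL instead of raw HTTP, a non-standard field such as `enable_thinking` would typically go through the `extra_body` argument of `chat.completions.create`, while `temperature` and `top_p` are regular keyword arguments.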

Get Started Immediately

Explore: Try Kimi K2.5 in the SiliconFlow playground.

Integrate: Use our OpenAI-compatible API. Explore the full API specifications in the SiliconFlow API documentation.
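For a dependency-free starting point, the request can be sent with Python's standard library alone. This is a minimal sketch, assuming the OpenAI-compatible base URL `https://api.siliconflow.com/v1` with the standard `/chat/completions` path and Bearer-token authentication; the model ID and the `SILICONFLOW_API_KEY` environment variable name in the usage comment are also assumptions. Verify all of them against the SiliconFlow API documentation.

```python
import json
import urllib.request

# Assumed OpenAI-compatible base URL; confirm it in the SiliconFlow API docs.
BASE_URL = "https://api.siliconflow.com/v1"


def make_chat_request(body: dict, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST to the chat-completions endpoint."""
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def chat(body: dict, api_key: str, timeout: float = 120.0) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(make_chat_request(body, api_key), timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


# Usage (assumed model ID and environment variable name):
# import os
# reply = chat({"model": "moonshotai/Kimi-K2.5",
#               "messages": [{"role": "user", "content": "Say hello."}],
#               "temperature": 0.6, "top_p": 0.95},
#              os.environ["SILICONFLOW_API_KEY"])
# print(reply)
```

Separating request construction from sending keeps the payload easy to inspect and unit-test; in production you would likely add retries and switch to the streaming response format for long Thinking Mode outputs.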